Synthetic data: definitions and use cases

Processing personal data is a heavily regulated activity, and personal data processing operations should be carried out in close compliance with the applicable legal frameworks.

The most common measures for mitigating the risks posed by data processing operations are encryption and de-identification of personal data. Sometimes, however, these measures are not feasible.

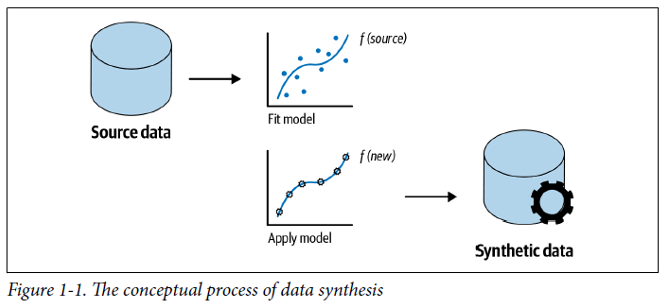

Another option is to generate new data from real datasets, a process called data synthesis. Synthetic data is generated from authentic data and attempts to emulate the statistical properties of the real dataset. If those statistical properties are simulated closely enough, synthetic data can be used as a proxy for real data in certain use cases.

Keeping the statistical properties means that anyone analyzing the synthetic data should be able to draw the same statistical conclusions from it as they would from the real (original) data.
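
One simple way to check whether statistical properties were kept is to compare summary statistics column by column. The sketch below, with hypothetical names, toy values and tolerance, reports the absolute gap in mean and standard deviation between real and synthetic columns. Matching these marginal statistics is necessary but not sufficient, so real utility assessments also compare relationships between variables and the results of the intended analyses.

```python
import statistics


def summarize(column):
    """Mean and sample standard deviation of one numeric column."""
    return {"mean": statistics.fmean(column), "stdev": statistics.stdev(column)}


def utility_report(real, synthetic):
    """Absolute gap between real and synthetic summary statistics, per column."""
    report = {}
    for name, real_values in real.items():
        r, s = summarize(real_values), summarize(synthetic[name])
        report[name] = {stat: abs(r[stat] - s[stat]) for stat in r}
    return report


def within_tolerance(report, tol):
    """True if every statistic differs by at most `tol` (a hypothetical acceptance rule)."""
    return all(gap <= tol for stats in report.values() for gap in stats.values())


# Hypothetical real and synthetic versions of the same column.
real = {"age": [23, 35, 47, 52, 61, 29, 41, 38]}
synthetic = {"age": [25, 33, 49, 50, 58, 31, 43, 37]}

report = utility_report(real, synthetic)
ok = within_tolerance(report, tol=2.0)
```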

Because synthetic data is not real data, it does not carry the same privacy risks: when it is generated correctly, there is no one-to-one mapping between the synthetic records and real individuals. Therefore, it can be considered de-personalized, or non-personal, data.[1]
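
A basic sanity check of the "no one-to-one mapping" property is to count synthetic records that are verbatim copies of real ones. The helper below is hypothetical and the records are toy values; in this example one of the four synthetic rows duplicates a real person, which would undermine the claim. A zero rate is necessary but not sufficient, since near-copies of unusual records can also disclose information.

```python
def exact_copy_rate(real_rows, synthetic_rows):
    """Fraction of synthetic records that reproduce a real record verbatim."""
    real_set = {tuple(row) for row in real_rows}
    copies = sum(1 for row in synthetic_rows if tuple(row) in real_set)
    return copies / len(synthetic_rows)


# Hypothetical records: (sex, age, postal code).
real = [("F", 34, "10115"), ("M", 51, "10117"), ("F", 29, "10243")]
synthetic = [("F", 35, "10115"), ("M", 51, "10117"), ("F", 30, "10245"), ("M", 48, "10119")]

rate = exact_copy_rate(real, synthetic)
```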

Why is it essential from a data protection and privacy perspective?

Retaining the statistical properties of a dataset while removing the risk factors associated with identifying natural persons brings many benefits. The most important ones are listed below.

First, synthetic data provides efficient access to data when it is impossible to obtain data subjects’ consent. Accessing vast amounts of data is critical to developing AI systems, that is, to training, testing, and validating AI models. More broadly, data is also needed to evaluate AI technologies that others have created and to test AI software applications or applications that incorporate AI models. In general, companies collect data for a specific purpose, so if developers plan to use it for other purposes, they may need to obtain consent from individuals. Data synthesis can provide analysts with realistic data to work with relatively efficiently and at scale.

Second, synthetic data enables better analytics. We can use synthetically generated datasets to train a model when real data does not exist (e.g., when modeling something completely new), when real data exists but is not labeled (e.g., for supervised machine learning), and when real data is not yet accessible. Developers may also use synthetic data to validate their assumptions and to demonstrate the kind of results their models can produce.

Third, synthetic data can be used as a proxy for real data. Where the utility of the synthetic data is high, analysts can obtain results similar to those they would get with authentic data.

Fourth, synthetic data reduces the costs of implementing controls. We can use synthetic data when the costs of implementing the security and privacy controls needed to process real data, even in de-identified form, are too high.

Fifth and finally, synthetic data reduces the regulatory compliance burden. We can also use synthetic data when processing personal data is costly or too burdensome. For example, it allows AI models to be trained in a less privacy-intrusive manner, because the training data does not directly refer to an identified or identifiable person. It could also help enhance privacy in data transfers: since synthetic data is considered a privacy-enhancing technology (PET), it might serve as an additional measure for data transfers outside the EU or within organizations that do not need to identify a specific person.

In which sectors do we use synthetic data?

The following list provides an overview of some of the potential uses for synthetic data.

- Manufacturing and distribution: Training robots to perform complex tasks on the production line or in warehouses can be challenging because it requires realistic training data covering multiple anticipated and unanticipated scenarios (e.g., recognizing objects under different lighting conditions, with different textures, and in various positions). Manufacturers and other organizations can use synthetic data for this purpose.

- Healthcare: Obtaining datasets to build AI models in the health industry is difficult because of the stringent regulations protecting special categories of personal data, such as health data. Obtaining patient consent, implementing strong security measures to protect the data, and collecting enough information to build a reliable model are challenges that synthetic data can help overcome.

- Financial services: Getting access to large volumes of historical market data or consumer transaction data is challenging and costly in the financial services industry. Synthetic data can help test internal software applications (e.g., fraud detection) and verify that those applications can scale to handle the large volumes of data they are likely to encounter in practice.

- Transportation: Synthetic data can also be helpful for the transportation industry, where two applications are particularly relevant. First, organizations can use synthetic data in microsimulation models. Microsimulation environments allow users to perform ‘what if’ analyses and run novel scenarios; they are especially attractive when no real data is available, in which case synthetic data must be created. Second, synthetic data expands the data available to autonomous vehicles for object identification.

When to use synthetic data?

When deciding whether to implement data synthesis, we should consider the PET alternatives to synthetic data, namely de-identification and pseudonymization.

But before deciding which technique to use, an organization should understand the concept of the identifiability spectrum.

We can consider identifiability as the probability of assigning a correct identity to a record in a dataset. Because it is a probability, it varies from 0 to 1. At one end of this spectrum is perfect identifiability (probability of 1), and at the other, it is impossible to assign an identity to a record correctly (probability of 0). Any dataset can have a probability of identification along this spectrum.
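
A common, simplified way to estimate where a dataset sits on this spectrum assumes an attacker who knows the target is in the dataset and matches on quasi-identifiers such as sex, birth year and postal code. Records sharing the same quasi-identifier values form a group, and each record's probability of correct identification is 1 divided by the size of its group. This is only one of several risk models; the column names, records and the threshold below are hypothetical.

```python
from collections import Counter


def reidentification_probabilities(records, quasi_identifiers):
    """Per-record chance of a correct match: 1 / size of the record's equivalence class."""
    keys = [tuple(record[q] for q in quasi_identifiers) for record in records]
    class_sizes = Counter(keys)
    return [1 / class_sizes[key] for key in keys]


# Hypothetical dataset; sex, birth year and postal code act as quasi-identifiers.
records = [
    {"sex": "F", "birth_year": 1980, "zip": "10115", "diagnosis": "flu"},
    {"sex": "F", "birth_year": 1980, "zip": "10115", "diagnosis": "asthma"},
    {"sex": "M", "birth_year": 1975, "zip": "10117", "diagnosis": "flu"},
    {"sex": "M", "birth_year": 1975, "zip": "10117", "diagnosis": "diabetes"},
    {"sex": "F", "birth_year": 1990, "zip": "10243", "diagnosis": "flu"},
]

probs = reidentification_probabilities(records, ["sex", "birth_year", "zip"])
max_risk = max(probs)                 # worst-case record
avg_risk = sum(probs) / len(probs)    # average over all records
THRESHOLD = 0.2                       # hypothetical, organization-chosen acceptable risk
acceptable = max_risk <= THRESHOLD
```

Here the single unique record sits at probability 1 (perfect identifiability), so the dataset fails the hypothetical threshold even though most records share their quasi-identifiers with someone else.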

Organizations should also consider the trade-off between data privacy and data utility: achieving a higher level of privacy means accepting a lower level of utility. Maximum utility comes from the original data without any transformations or controls, but that also offers the least privacy, and vice versa.
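
The trade-off can be illustrated with generalization, a common de-identification transformation used here as a stand-in for any transformation of the original data: coarsening birth years into wider bins makes records less distinguishable but also discards detail. The sketch assumes a single hypothetical quasi-identifier and uses the number of distinct surviving values as a crude utility proxy.

```python
from collections import Counter


def generalize(year, width):
    """Replace an exact year with the first year of its `width`-year bin."""
    return year - year % width


def max_risk(years, width):
    """Highest per-record identification probability after generalization."""
    class_sizes = Counter(generalize(y, width) for y in years)
    return max(1 / size for size in class_sizes.values())


def distinct_values(years, width):
    """Crude utility proxy: how many distinct values survive generalization."""
    return len({generalize(y, width) for y in years})


birth_years = [1971, 1973, 1976, 1978, 1982, 1984, 1987, 1989]  # hypothetical
for width in (1, 5, 10):
    print(width, max_risk(birth_years, width), distinct_values(birth_years, width))
```

With these toy values, widening the bins from 1 to 10 years lowers the worst-case risk from 1.0 to 0.25, while the number of distinct values drops from 8 to 2: more privacy, less detail.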

In general, however, complying with privacy regulations worldwide also requires implementing controls to manage the risk and protect the data.

Organizations generally take a holistic approach and decide which PET to adopt based on the following criteria:

- Privacy: the extent of data protection, which comes down to whether the identifiability threshold is acceptable and whether the organization is confident that the actual identifiability of the data falls below that threshold

- Data utility: the extent to which the data achieves the business objectives

- Operational costs: the costs of acquiring and implementing the technology and the costs of the controls that organizations must put in place to use the synthetic data

- Consumer trust: the willingness of individuals to continue to transact with the organization when using synthetic data

- Level of transformation of the original data: if the original data is significantly transformed, it may lose utility value.

Implementing more controls increases privacy protection, consumer trust, data utility, and operational costs; utility rises because stronger controls allow the original data to be transformed less. More transformation of the original data also increases privacy protection and consumer trust, but it may decrease data utility.

Is synthetic data the solution to all the problems?

Of course not. Using synthetic data to gain insights or train AI models is not a silver bullet, and it has limitations that are worth exploring.

- Risk of reidentification. As previously seen, synthetic data requires making trade-offs between privacy and utility. The more a synthetic dataset emulates the original dataset, the more utility it will have for analysts, but the more personal data it may reveal. That increases the compliance burden and the need to implement more controls to protect personal data.

- Adversarial machine learning. In some situations, an attacker attempts to influence the generation of synthetic data to force leakage. Although this requires both possession of the synthetic dataset and access to the model used to create it, the attacker may then uncover original information from the dataset. These membership inference risks are most acute for outlier records (i.e., records with characteristics that stand out from the others).

- Non-universality. There will always be situations in which the demands of a particular use case cannot be satisfied by any privacy-preserving technique.[2]
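
Why outliers are more exposed can be illustrated with a deliberately simplified, distance-based membership test that uses only the released synthetic records, a weaker setting than the model-access attack described in the list. A close synthetic neighbor is weak evidence for a typical record, because many synthetic rows sit in dense regions anyway, but strong evidence for an unusual one. The function names, data and threshold are hypothetical, and features would normally be scaled before computing distances.

```python
import math


def nearest_distance(target, synthetic_rows):
    """Euclidean distance from a target record to its closest synthetic record."""
    return min(math.dist(target, row) for row in synthetic_rows)


def guess_membership(target, synthetic_rows, threshold):
    """Naive attack: guess 'was in the training data' if a synthetic record is very close."""
    return nearest_distance(target, synthetic_rows) <= threshold


# Hypothetical synthetic release: (age, income in thousands).
synthetic = [(40, 50), (42, 52), (38, 47), (95, 310)]

outlier_member = (96, 305)      # hypothetically, an unusual person used in training
outlier_non_member = (20, 200)  # hypothetically, an unusual person who was not

print(guess_membership(outlier_member, synthetic, threshold=6.0))
print(guess_membership(outlier_non_member, synthetic, threshold=6.0))
```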

How can these challenges be addressed?

One essential consideration to bear in mind is that not every synthetically generated dataset is privacy-preserving.

When a generative model is trained without applying any form of data sanitization during or after training, the resulting data is called “vanilla synthetic data.”[3] This kind of synthetic data is prone to data leakage. We argue that vanilla synthetic data does not guarantee that a dataset is completely free of actual identifiers (under HIPAA), nor does it fully protect privacy (as required by the CCPA or GDPR).[4]

A solution may be to use differential privacy in combination with synthetic data generation. We gain utility via synthetic data generation and privacy through differential privacy.[5] Differentially private synthetic data is one of the most robust privacy-preserving methods for synthetic data.[6]
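
A minimal sketch of the idea, assuming a single categorical column whose possible values are public and fixed in advance: count the records in each category, add Laplace noise calibrated to the privacy parameter epsilon, and sample synthetic values from the noisy histogram. This is one simple textbook approach, not the specific method of the cited sources; the names and data are hypothetical, and Python's standard `random` generator is not suitable for production differential privacy, where a vetted library should be used instead.

```python
import random
from collections import Counter


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_histogram_synthesizer(values, domain, epsilon, n_synthetic, seed=None):
    """Sample synthetic values from a histogram released under epsilon-differential privacy."""
    rng = random.Random(seed)
    counts = Counter(v for v in values if v in domain)
    # Adding or removing one person changes one count by 1, so the L1 sensitivity is 1
    # and Laplace noise with scale 1/epsilon gives epsilon-differential privacy.
    noisy = [max(0.0, counts[c] + laplace_noise(1 / epsilon, rng)) for c in domain]
    # Clamping and sampling are post-processing, so they do not weaken the guarantee.
    if sum(noisy) == 0:
        noisy = [1.0] * len(domain)
    return rng.choices(domain, weights=noisy, k=n_synthetic)


domain = ["flu", "asthma", "diabetes"]  # public, fixed in advance
diagnoses = ["flu"] * 50 + ["asthma"] * 30 + ["diabetes"] * 20  # hypothetical records
synthetic = dp_histogram_synthesizer(diagnoses, domain, epsilon=1.0, n_synthetic=1000, seed=7)
```

A smaller epsilon means more noise and therefore stronger privacy but a less faithful histogram, which is the privacy-utility trade-off expressed as a single parameter.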

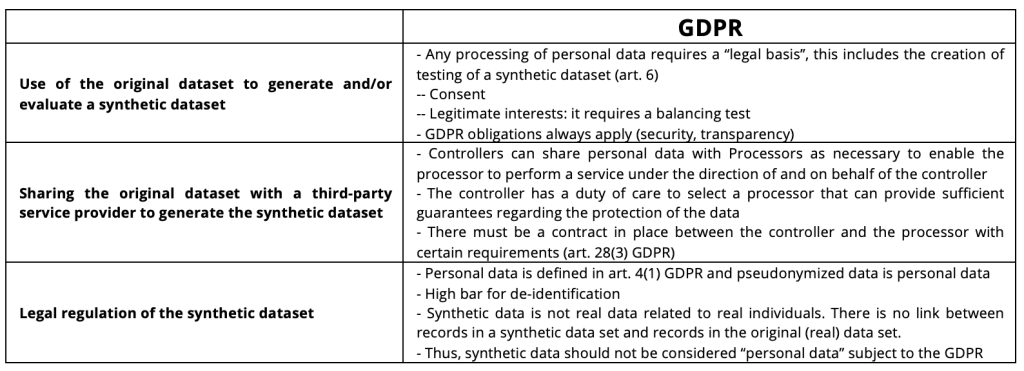

What legal framework regulates the generation, sharing, and use of synthetic data?

The table below summarizes the most important GDPR provisions concerning the generation of synthetic data, the sharing of data to build a synthetic dataset, and the use of synthetic data.[7]

In summary

Why synthetic data?

- Getting access to good quality realistic data for AI/ML projects is difficult (time-consuming and costly, and in some cases not possible)

- Data protection regulations and cross-border data transfer concerns are significant factors causing these difficulties.

- Sometimes data is available, but the security levels needed to process it are high, which increases costs and reduces flexibility. That slows down the ability to build, test, and evaluate models and analytics technologies.

- Data synthesis makes realistic data available for secondary purposes quickly.

When should an organization use synthetic data?

- AI development: making data available to internal analysts and external collaborators for model development and testing

- Software testing: performance and functional testing when access to production data is restricted or impossible

- Technology evaluation: evaluating analytics and data wrangling technologies (from and by start-ups and academics)

References

[1] European Data Protection Supervisor, "Synthetic data" (TechSonar), https://edps.europa.eu/press-publications/publications/techsonar/synthetic-data_en

[2] Steven M. Bellovin et al., Privacy and Synthetic Datasets, 22 Stan. Tech. L. Rev. 1 (2019), p. 4.

[3] Idem, p. 41.

[4] Idem, pp. 44 and 47.

[5] Idem, p. 38.

[6] For more on how differentially private synthetic data is generated, see NIST: https://www.nist.gov/blogs/cybersecurity-insights/differentially-private-synthetic-data

[7] The table is adapted from the research by Khaled El Emam, Lucy Mosquera and Richard Hoptroff, Practical Synthetic Data Generation: Balancing Privacy and the Broad Availability of Data (O’Reilly, 2020).